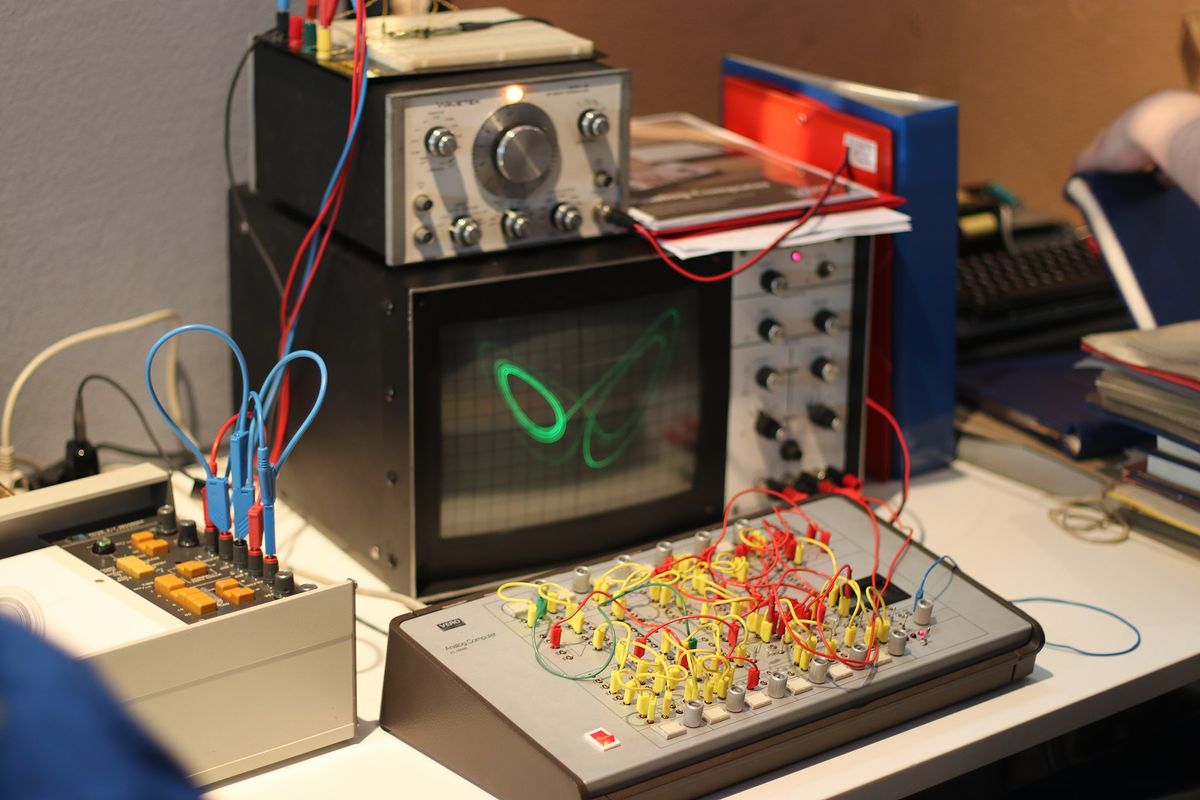

Is it time to bring back the analog computer?

Computer scientists have been predicting a comeback for analog, and not just for tech hobbyists.

In the later part of the 19th century, engineers coined the term “analog” to describe computers that simulated real-world conditions. Rather than the binary code of digital computers, analog computers used continuously varying physical quantities to calculate approximate results. By 2002, the world was storing more information in digital form than in analog format, and by the early 2010s, the world’s shift from analog to digital computing was pretty much complete.

According to Martin Hilbert, a researcher at the University of Southern California, within the next century the world’s computational power and storage capacity could reach “as much information as that which can be stored in the molecules of all humankind's DNA.”

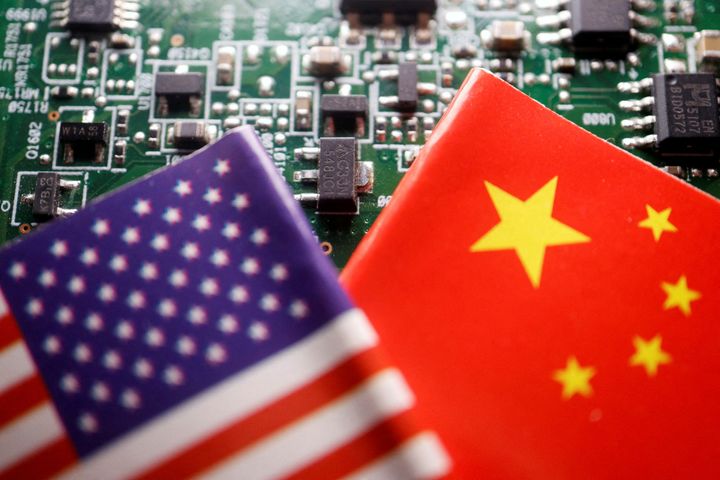

But computer scientists have been predicting a comeback for analog, and not just among tech hobbyists. In fact, analog computing could give AI the edge it needs.

For example, a startup called Mythic makes analog AI processors. How does that even work? It may not seem like it, but digital computing has its limits. In the 1990s and early 2000s, analog computers were replaced by their digital counterparts because digital machines were cheaper, smaller, more accurate, and more precise.

But today, AI is built largely on what are known as deep neural networks (DNNs), which don’t need that same precision and which rely heavily on matrix multiplication. Analog computers are very good at matrix multiplication. And because they process data locally and don’t need electricity to retain what is stored in memory, analog chips use less power than digital chips.
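To see why analog hardware suits matrix multiplication, here is a minimal sketch of an idealized analog “crossbar,” assuming each weight is stored as an electrical conductance and each input is applied as a voltage. Ohm’s law turns each weight-input pair into a current, and the currents on a shared output wire simply add up, so the circuit produces the sum of products directly. The noise model, the function names, and the toy weights are all hypothetical illustrations, not Mythic’s actual design.

```python
import random


def analog_matvec(conductances, voltages, noise_std=0.02, seed=0):
    """Simulate an idealized analog crossbar computing currents = G @ v.

    conductances[i][j]: weight stored as a cell conductance (hypothetical units)
    voltages[j]: input encoded as a voltage
    Ohm's law (I = G * V) gives each cell's current, and Kirchhoff's current law
    adds the currents flowing into each output wire. The multiplicative Gaussian
    noise is a hypothetical stand-in for analog device variation.
    Note: real cells can't have negative conductance; chips typically encode a
    signed weight as the difference of two cells. This sketch ignores that.
    """
    rng = random.Random(seed)
    currents = []
    for row in conductances:
        ideal = sum(g * v for g, v in zip(row, voltages))
        # The result lands close to, but not exactly on, the ideal value
        currents.append(ideal * (1 + rng.gauss(0.0, noise_std)))
    return currents


def digital_matvec(weights, inputs):
    """Exact reference computation, as a digital processor would do it."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


# A toy 3-output "layer" with made-up weights and inputs
weights = [[0.9, -0.2, 0.4],
           [0.1, 0.8, -0.5],
           [-0.3, 0.2, 0.7]]
inputs = [1.0, 0.5, 0.2]

exact = digital_matvec(weights, inputs)
approx = analog_matvec(weights, inputs)
print("exact: ", [round(y, 3) for y in exact])
print("analog:", [round(y, 3) for y in approx])
# A classifier only cares which output is largest, so small errors rarely change the answer
print("same prediction:", exact.index(max(exact)) == approx.index(max(approx)))
```

The analog result is slightly off, but the largest output (the network’s “prediction”) stays the same, which is the kind of imprecision DNNs can tolerate.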

According to Mythic, “Today’s digital AI processors are tremendously expensive to develop and rely on traditional computer architectures, limiting innovation to only the largest technology companies. Inference at the edge requires low latency, low power, and must be cost-effective and compact. Digital processors are just not able to meet these challenging needs of edge AI.”

Plus, the flash memory in analog chips holds a range of different voltages, rather than just 0 or 1, to simulate how neurons work in the brain. After all, isn’t the whole point of AI that it works like a human brain?
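Why does a cell that holds several voltage levels matter? A neuron’s weight has to be stored somewhere, and the more distinct levels a cell can hold, the closer the stored weight is to the original. The sketch below assumes a hypothetical cell that snaps each weight to one of a fixed number of evenly spaced levels, then compares a single neuron’s output against full precision. The level counts (2, 4, 16, 256) and the helper names are illustrative assumptions, not the specs of any real chip.

```python
def quantize_to_levels(weight, num_levels, w_max=1.0):
    """Snap a weight to the nearest of num_levels evenly spaced values in [-w_max, w_max].

    Hypothetical model of a memory cell that can hold several distinct
    voltage levels instead of only "0" or "1".
    """
    step = 2 * w_max / (num_levels - 1)
    clipped = max(-w_max, min(w_max, weight))
    return round((clipped + w_max) / step) * step - w_max


def neuron(weights, inputs, bias=0.0):
    """A single artificial neuron: weighted sum followed by a ReLU activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


weights = [0.42, -0.17, 0.88, 0.05]
inputs = [1.0, 0.3, 0.6, 0.9]

full = neuron(weights, inputs)
for levels in (2, 4, 16, 256):
    stored = [quantize_to_levels(w, levels) for w in weights]
    print(f"{levels:>3} levels -> output {neuron(stored, inputs):.4f} "
          f"(full precision {full:.4f})")
```

With only two levels (a plain on/off bit per weight), the output is far off; as the number of levels grows, the neuron’s output quickly approaches the full-precision value.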
