Apple iPhones will now flag child sexual abuse material. What does this mean for data privacy?

What’s the new flagging program?

- One of the programs involves scanning photos that get uploaded to iCloud and then determining whether the content is child sexual abuse material, or CSAM.

- To determine whether content is CSAM, Apple runs a matching process on the device itself: before a photo is uploaded to iCloud, the device compares that image against a list of hashes of known CSAM images.

- These CSAM image hashes are provided by child safety organizations, such as the National Center for Missing & Exploited Children (NCMEC).

- According to Apple Inc., the hashing technology, which is called NeuralHash, “analyzes an image and converts it to a unique number specific to that image. Only another image that appears nearly identical can produce the same number.”

- If Apple’s system finds a match, an Apple employee double-checks it to make sure the system got it right. Once the match is verified, the information will be forwarded to these child safety organizations, and the user’s iCloud account will be locked.

- The other tool is for parents: it will alert them if their child sends or receives nude photos in text messages.
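To make the hash-matching idea above more concrete, here is a minimal Python sketch. It is not Apple’s method: it swaps in a simple “average hash” in place of NeuralHash (which relies on a neural network), and the image format, the function names `average_hash`, `matches_known_hash` and `route_upload`, and the sample images are all hypothetical. It only illustrates the general principle that a nearly identical image can produce the same number while a different image does not. Apple’s actual system layers further cryptographic protections on top, which this sketch does not attempt to reproduce.

```python
from typing import List, Set

# Hypothetical image format: rows of grayscale brightness values (0-255).
# A real photo would first be shrunk to a small grid such as 8x8.
Image = List[List[int]]


def average_hash(image: Image) -> int:
    """Toy perceptual hash (aHash), NOT Apple's NeuralHash: one bit per
    pixel, set to 1 if that pixel is brighter than the image's mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def matches_known_hash(image: Image, known_hashes: Set[int]) -> bool:
    """Check done on the device before upload: is this image's hash in the
    list of known hashes supplied by a child safety organization?"""
    return average_hash(image) in known_hashes


def route_upload(image: Image, known_hashes: Set[int]) -> str:
    """Hypothetical routing: a match is not reported automatically; it is
    held so a person can confirm it first, as described in the list above."""
    if matches_known_hash(image, known_hashes):
        return "hold for human review"
    return "upload"


# A hypothetical 8x8 "known" image: a left-to-right brightness gradient.
known_image = [[col * 30 for col in range(8)] for _ in range(8)]
known_hashes = {average_hash(known_image)}

# A slightly brightened copy looks nearly identical and gets the same hash...
brightened = [[p + 10 for p in row] for row in known_image]
# ...while a different image (a top-to-bottom gradient) does not.
different = [[row * 30 for _ in range(8)] for row in range(8)]

print(route_upload(brightened, known_hashes))  # hold for human review
print(route_upload(different, known_hashes))   # upload
```

An average hash does not change when every pixel is brightened by the same amount, because the mean shifts by that amount too; that is why the brightened copy still matches. Production perceptual hashes aim to stay stable under more kinds of edits, such as resizing and recompression.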

What’s Apple saying about it?

- According to the company, the tool works only on the iPhones themselves, and Apple will have no way of seeing the photos.

- Apple has emphasized that the new tools are designed to protect user privacy, so that Apple doesn’t have access to things like the images exchanged in users’ text messages.

- In its feature announcement post, Apple also included technical assessments from three cybersecurity experts, all of whom say that privacy concerns have been properly addressed.

- “Apple has found a way to detect and report CSAM offenders while respecting these privacy constraints,” wrote Mihir Bellare, one of the cybersecurity experts.

- “Harmless users should experience minimal to no loss of privacy,” wrote David Forsyth, another expert consulted by Apple.

- “This innovative new technology allows Apple to provide valuable and actionable information to NCMEC and law enforcement regarding the proliferation of known CSAM,” Apple said in its announcement post. “And it does so while providing significant privacy benefits over existing techniques.”

What about the critics?

- The main point critics have raised is that the new features create potential privacy concerns for users going forward.

- “Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow,” wrote National Security Agency (NSA) whistleblower Edward Snowden on Twitter about the issue. “They turned a trillion dollars of devices into iNarcs-*without asking.*”

- According to Matthew D. Green, a cryptography professor at Johns Hopkins University, this technology sets a potentially dangerous precedent, inviting demands from governments that want to surveil their citizens.

- “They’ve been selling privacy to the world and making people trust their devices,” said Green in a New York Times interview. “But now they’re basically capitulating to the worst possible demands of every government. I don’t see how they’re going to say no from here on out.”

Wait, does that kind of thing happen?

- There isn’t really a clear answer to that.

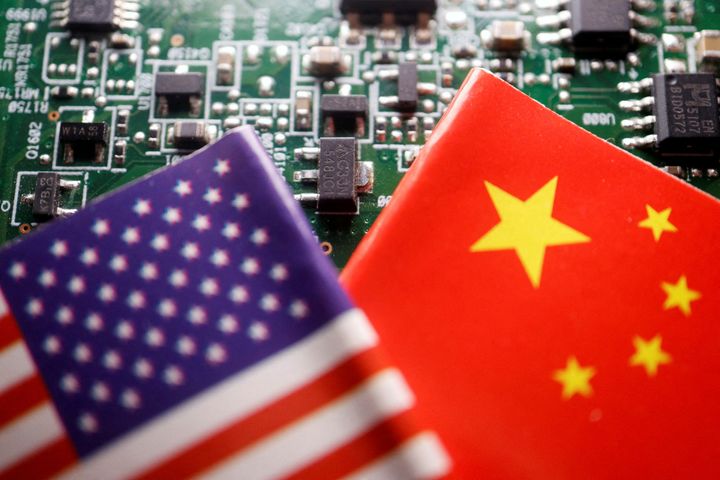

- But in 2017, a Chinese law gave the Chinese government control over some data centers that hold the data of Apple users in China.

- The New York Times reported that Apple had given up control of its data centers in Guiyang and Inner Mongolia to the Chinese government. Apple, however, has said that the encryption used in those data centers keeps the data private.

- The Times acknowledged that it hadn’t seen any proof that the Chinese government had accessed the information, and Apple denied the allegations. Still, the underlying issue is that Chinese officials can demand that the data be handed over.

- Now, tech experts who are critical of Apple’s new features worry that something similar could happen with these tools, with a government forcing Apple to use them for other purposes.

What’s next?

- Well, it doesn’t look like Apple is going to slow down the release of this feature. As usual, the company did its research on the topic, and it’s unlikely to back down.

- Last Friday, in a media briefing, the company said it would plan any expansion of the service country by country, based on each country’s laws. This way, it can protect itself from government pressure to identify content other than CSAM.

- In the United States, companies are required to report CSAM to the authorities. Apple has historically reported far fewer cases than other tech giants, such as Facebook Inc. Last year, Apple reported fewer than 300 CSAM cases, whereas Facebook reported more than 20 million.

- So once Apple rolls out this program, we should probably expect that number to go up.

Have a tip or story? Get in touch with our reporters at tips@themilsource.com