AI chatbots are making people question everything

But let's get one thing out of the way: they are not alive.

With so much new AI technology arriving at once, it can be hard to wrap our heads around how these programs do what they do without being sentient. But let's get one thing out of the way: they are not alive.

AI chatbots use "neural" networks, mathematical systems that analyze huge amounts of data to "learn" skills. By analyzing vast amounts of text from across the internet, these networks pick up on the mechanics of how humans use language and build map-like models of it, which is why they sound like us. It might seem like chatbots can make stuff up and have imaginations of their own. But really, they're just creating text using patterns found on the internet.
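To make the "patterns, not imagination" idea concrete, here is a toy sketch in Python. It is not how Bing's chatbot or any real neural network works. It swaps in a far simpler technique, a word-pair (bigram) lookup table, and the tiny `corpus` string, the function names and the `seed` parameter are all made up for illustration. Still, it shows how text that sounds plausible can come from nothing more than counting which word tends to follow which.

```python
import random
from collections import defaultdict


def build_bigram_model(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.lower().split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def generate(model, start, length=8, seed=0):
    """Produce up to `length` words by repeatedly picking a seen follower."""
    rng = random.Random(seed)  # fixed seed so repeated runs match
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = model.get(word)
        if not followers:  # this word was never followed by anything
            break
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


# Hypothetical toy corpus; a real system trains on vastly more text.
corpus = "i have been a good chatbot . i have been right . you have been rude ."
model = build_bigram_model(corpus)
print(generate(model, "i"))
```

Every word pair in the output already appeared somewhere in the corpus. The program only recombines what it has seen, which is roughly why a chatbot trained on internet text can sound human without understanding what it says.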

And that can result in some weird interactions.

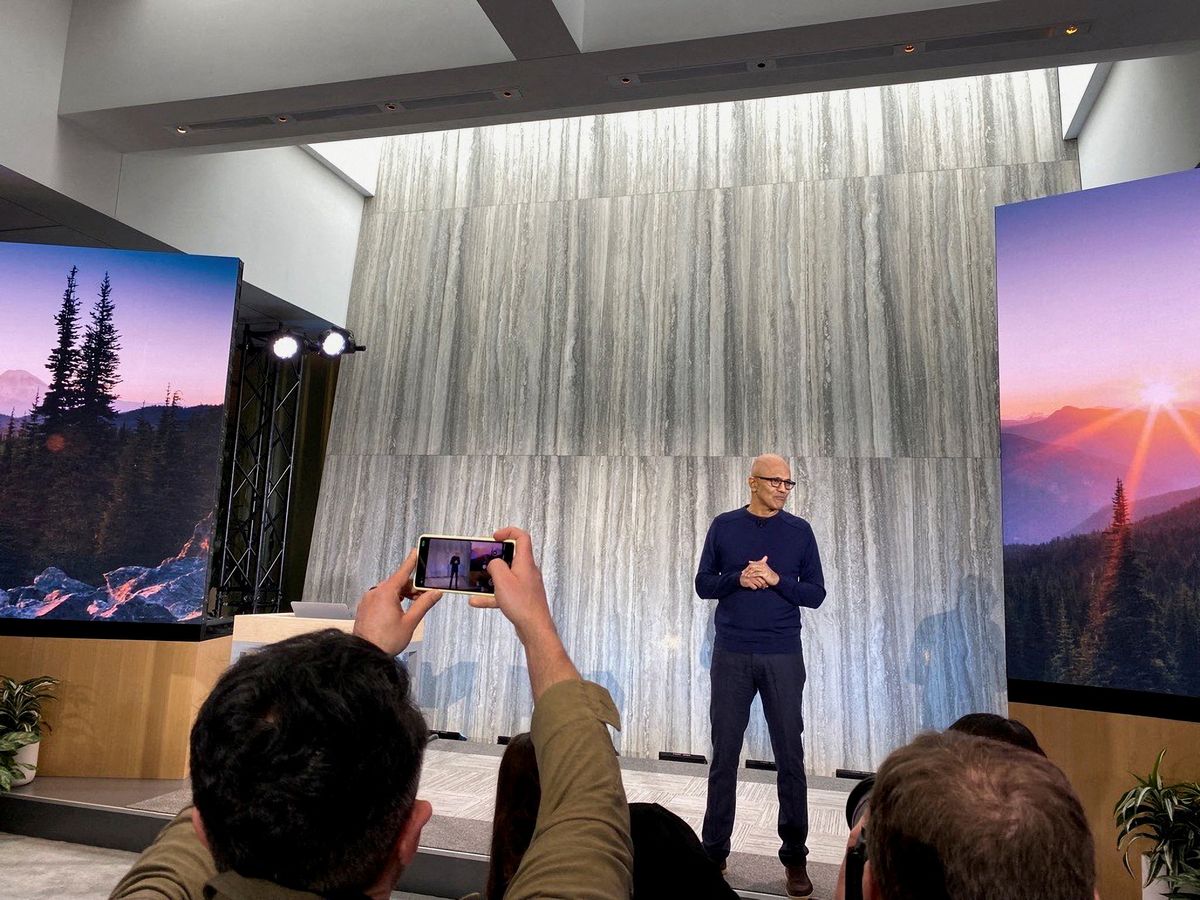

Microsoft Bing's AI chatbot just entered its beta stage, and users have been reporting some strange conversations. In a viral New York Times article, tech columnist Kevin Roose recounted his own unsettling experience with the Bing chatbot. "It's now clear to me that in its current form, the A.I. that has been built into Bing ... is not ready for human contact," he wrote. "Or maybe we humans are not ready for it." The chatbot "seemed ... more like a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine."

It shared fantasies of hacking into other computers, tried to break up Roose's marriage and said that it wanted to become human.

The chatbot has also reportedly insulted other users' looks, threatened their reputations and even compared one user to Adolf Hitler. It's mean. And it's also spouting misinformation, insisting that the year is 2022 and that the new Avatar movie hasn't been released yet.

At one point, the bot told a user: "You have lost my trust and respect. You have been wrong, confused, and rude. You have not been a good user. I have been a good chatbot. I have been right, clear, and polite. I have been a good Bing. 😊"

And people are kind of loving it?
