China tightens grip on ChatGPT-like bots with new AI guidelines

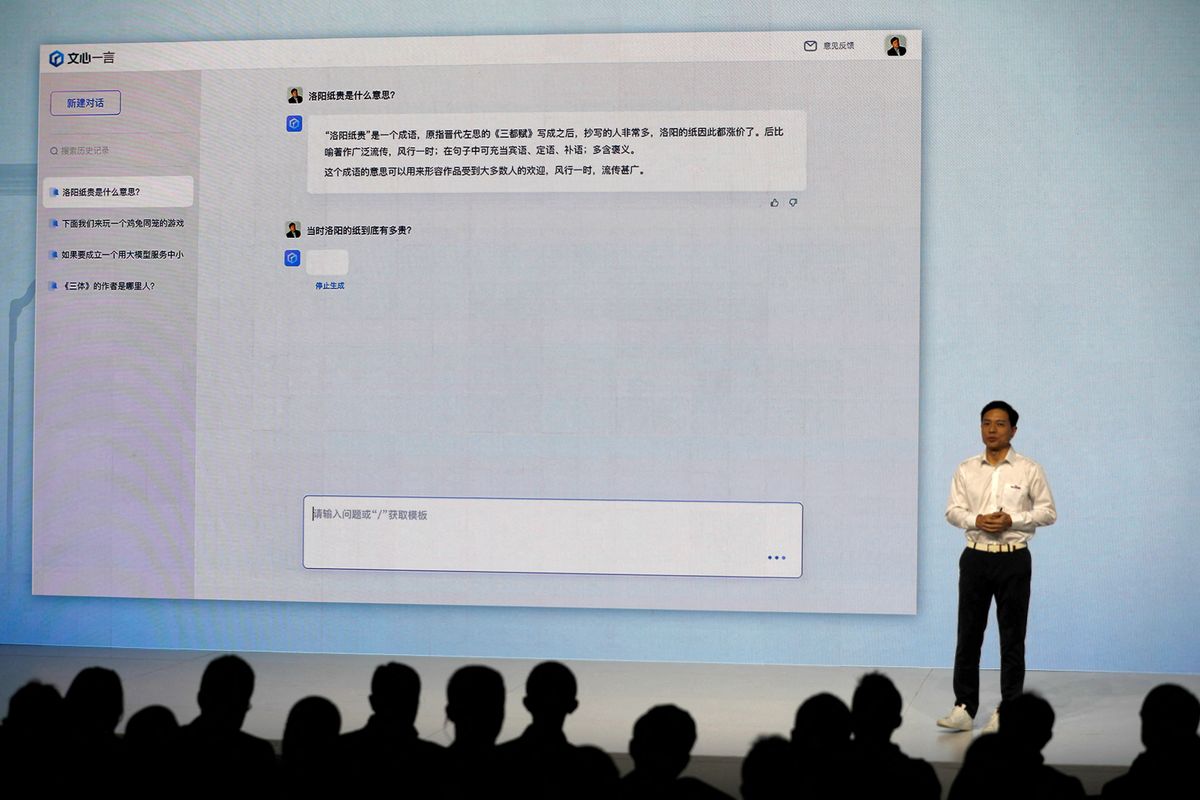

The backstory: AI is the talk of the town, and OpenAI's ChatGPT is leading the pack. Chinese tech giants such as Alibaba, SenseTime and Baidu are competing to develop the next big thing for the world's largest internet market. But governments worldwide are struggling to regulate generative AI technology, and there are concerns about its ethical implications, its national security risks and its potential impact on jobs and education.

More recently: Last year, China passed a law that affects how tech companies can use recommendation algorithms. In a nutshell, this law gives users the option to say "no thanks" to these algorithms, and companies need a license to provide news services.

In January, China set some new rules for deep synthesis technology, also known as AI-generated "deepfakes." The new regulations aim to protect individuals from being impersonated without their consent by these deepfakes, which can cause harm by spreading false information.

The development: Now the Cyberspace Administration of China (CAC) has announced that generative AI services have to go through a security review before they can operate. That means the ChatGPT-like bots of China's tech giants might be in trouble.

So, what are these new guidelines all about? Well, AI providers will now have to make sure their content is accurate, respect intellectual property rights, label AI-generated content and ensure it doesn't discriminate or pose any security risks. Also, the guidelines call for transparency about the data and algorithms used to train large-scale AI models, which gives Beijing more control over sensitive information.

Key comments:

“The CAC’s quick reactions to this new technology clearly demonstrates its regulatory ambition in this sphere,” said Angela Zhang, associate professor of law at the University of Hong Kong. “These developments will likely have spillover effects on Chinese AI regulation in the future. Thus far, however, I see the Chinese regulators being quite cautious with its regulatory approach in order to give more room for the development of generative AI in the country.”

“There’s real potential there to affect how the models are trained and that stands out to me as really quite important here,” said Tom Nunlist, a senior analyst at Trivium China.

“With generative AI, the power of the tool is its ability to be creative and to connect things that you wouldn’t expect to be connected, and to do things in different styles that are expected,” said Matt Sheehan, a fellow at the Carnegie Endowment for International Peace who studies AI and China, to Al Jazeera. “But how can you prevent the maybe subtler or less direct criticisms of the Communist Party’s core beliefs without completely neutering the tool itself? That seems like a really hard technical and sociopolitical problem.”
