AMD showcases new AI chip to compete with Nvidia

Nvidia does have some competition in the AI chip market.


The backstory: As artificial intelligence (AI) continues to advance at full speed, there's a growing need for powerful computing solutions to support its applications. That's where AI chips come into play, and they’re becoming a big deal in the semiconductor industry. These chips are crucial for building AI programs like OpenAI’s ChatGPT.

Unlike general-purpose CPUs, AI chips (usually GPUs or other specialized accelerators) are purpose-built for handling AI workloads with better performance, energy efficiency and cost-effectiveness. They bring specialized processing power that makes running AI algorithms faster and more efficient. This is super important for tasks like language processing, image recognition and machine learning.

More recently: Semiconductor giant Nvidia makes most of the chips for the AI industry. In fact, it has around 95% of the GPU market for machine learning. The latest and best AI chips from the California-based company can cost US$10,000 or more each. So, it’s no surprise that shares in the company recently rallied big time, even briefly pushing Nvidia into the US$1 trillion value club with big names like Apple and Meta.

But Nvidia does have some competition in the AI chip market. For example, tech giant Google has its own custom chips, tensor processing units (TPUs), which it uses for search and machine learning and rents out to developers through its cloud. In April, the company revealed a TPU-based supercomputer that it says is faster and more power-efficient than comparable Nvidia systems. Meanwhile, big semiconductor players such as Advanced Micro Devices (AMD) and Intel have also jumped into the AI chip game, offering dedicated chips designed for AI applications.

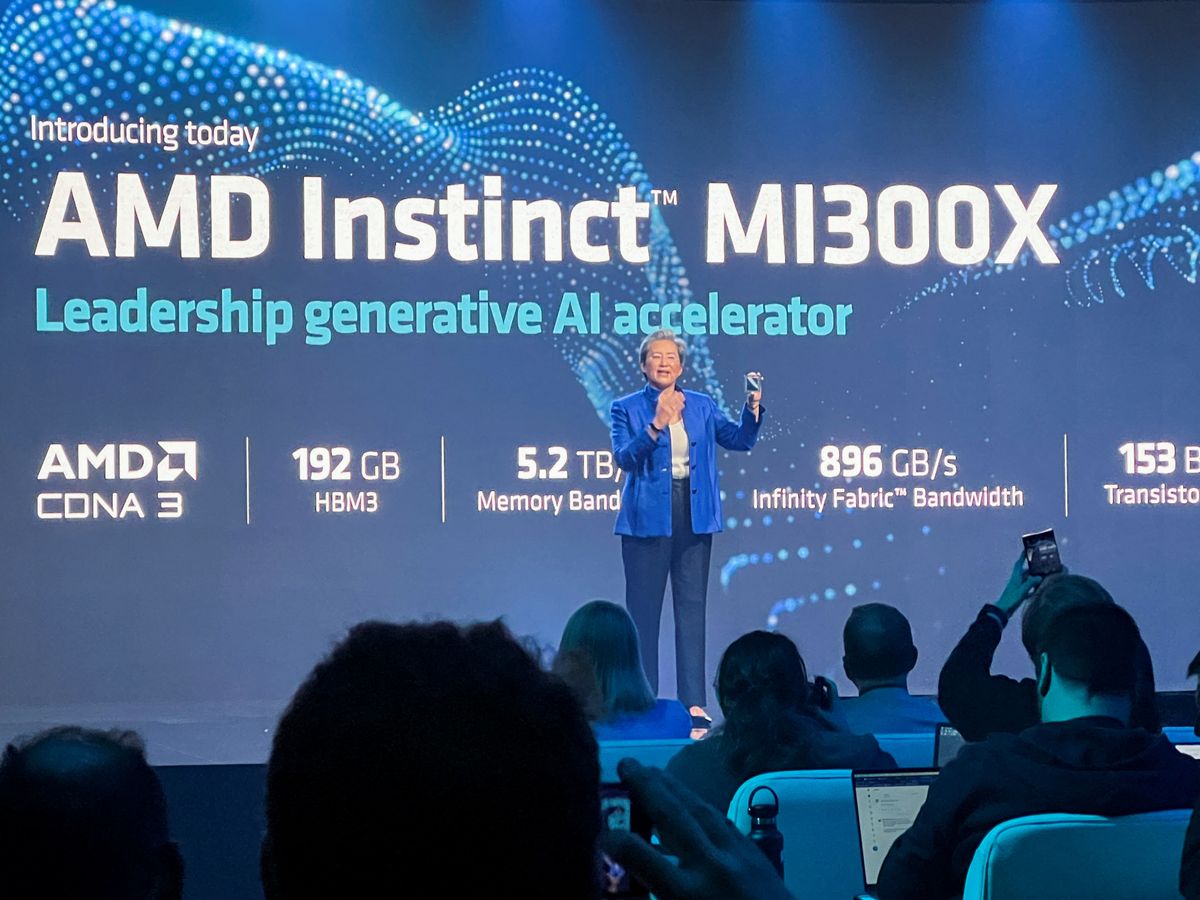

The development: AMD dropped some big news on Tuesday about its latest GPU for AI, the MI300X. It's part of the company’s Instinct MI300 series, and it's causing quite a buzz. This chip is all set to start shipping to customers later this year.

Now, what makes the MI300X so special? Well, it's a real powerhouse when it comes to tackling generative AI tasks. The chip is specifically optimized for large language models and has some serious memory capacity. In fact, it can support up to 192GB of memory. To put that into perspective, Nvidia’s rival H100 tops out at 80GB in its standard configuration.
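To see why memory capacity matters so much for large language models, here is a rough back-of-the-envelope sketch. It assumes 16-bit weights (2 bytes per parameter) and counts the weights only, ignoring activations and other runtime overhead. The model sizes in the loop are hypothetical round numbers for illustration, not figures from AMD, and the function names are made up for this example.

```python
# Rough estimate of how much memory a model's weights need.
# Assumption: 2 bytes per parameter (16-bit weights); real deployments
# also need memory for activations and caches, which this ignores.

MI300X_MEMORY_GB = 192  # capacity quoted in the article


def weights_footprint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_chip(num_params: float, memory_gb: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in the given memory capacity."""
    return weights_footprint_gb(num_params, bytes_per_param) <= memory_gb


if __name__ == "__main__":
    # Hypothetical model sizes, in billions of parameters.
    for billions in (7, 40, 70, 175):
        size = weights_footprint_gb(billions * 1e9)
        verdict = "fits" if fits_on_chip(billions * 1e9, MI300X_MEMORY_GB) else "does not fit"
        print(f"{billions}B parameters: about {size:.0f} GB of weights, {verdict} in {MI300X_MEMORY_GB} GB")
```

The takeaway is simply that more on-chip memory means a bigger model can sit on a single chip instead of being split across several.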

This could be a game-changer for AMD and might even put some pricing pressure on Nvidia. Select AMD customers will get a chance to put the MI300X through its paces in the third quarter before it goes into full-blown production in the fourth quarter of this year.

On top of the MI300X news, AMD also shared some updates on its latest Epyc server processors, which are like the muscle of the data center. They're designed to handle intense workloads and deliver top-notch performance. The chipmaker also introduced the Bergamo variant, which is like a turbocharged engine for the cloud. It's specifically built to handle the massive demands of cloud-based applications and services. And speaking of Bergamo, AMD is already shipping those chips to some major players, including Meta.

During the presentation, CEO Lisa Su described AI as the company's biggest growth opportunity. AMD expects the market for data center AI “accelerators” (which is what AMD calls its AI chips) to grow like crazy, hitting over US$150 billion by 2027.

Key comments:

“We are still very, very early in the life cycle of AI,” said AMD CEO Lisa Su at the presentation on Tuesday in San Francisco. “The total addressable market for data center AI accelerators will rise fivefold to more than US$150 billion in 2027.”

"I think the lack of a [large customer] saying they will use the MI300 A or X may have disappointed the Street. They want AMD to say they have replaced Nvidia in some design," said Kevin Krewell, principal analyst at TIRIAS Research.

“Now while this is a journey, we’ve made really great progress in building a powerful software stack that works with the open ecosystem of models, libraries, frameworks and tools,” said AMD’s president, Victor Peng.

"We're still working together on where exactly that will land between AWS and AMD, but it's something that our teams are working together on," said Dave Brown, vice president of elastic compute cloud at Amazon. "That's where we've benefited from some of the work that they've done around the design that plugs into existing systems."
