Microsoft shows off its first custom AI chips

At its Ignite developer conference, Microsoft just revealed its first self-made AI chip and cloud-computing processor.

The backstory: Big Tech companies and startups everywhere are racing to develop artificial intelligence (AI) technologies and models. One of the biggest names in AI right now is Nvidia, which has designed the most advanced semiconductor chips used to build this kind of technology, known as graphics processing units (GPUs). Another is OpenAI, which uses Nvidia’s GPUs. But Nvidia’s best-performing AI cards have reportedly already sold out until next year. As competition heats up, there just don’t seem to be enough GPUs coming from Nvidia (or its rivals) to power the whole AI landscape.

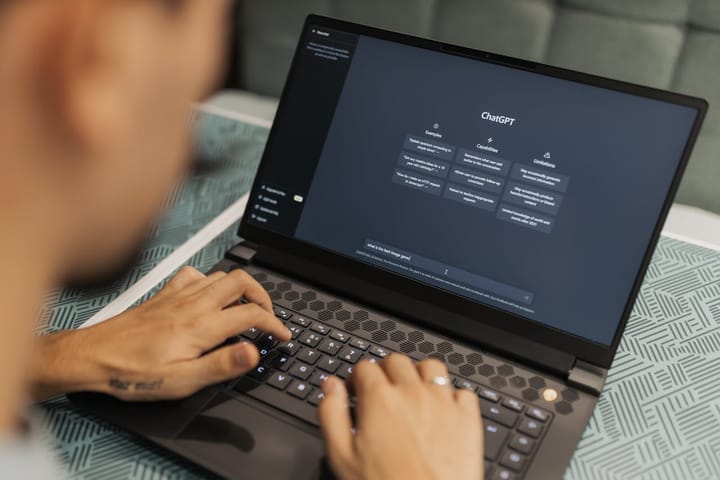

More recently: Since 2019, tech giant Microsoft has reportedly been working on its own AI chip, under a project code-named “Athena,” that wouldn’t rely on outside GPUs. As AI has grown more popular, though, Microsoft has mostly been integrating technology from OpenAI, a firm it has invested heavily in. Meanwhile, competitors Google and Amazon have already launched their own custom AI chips in recent years.

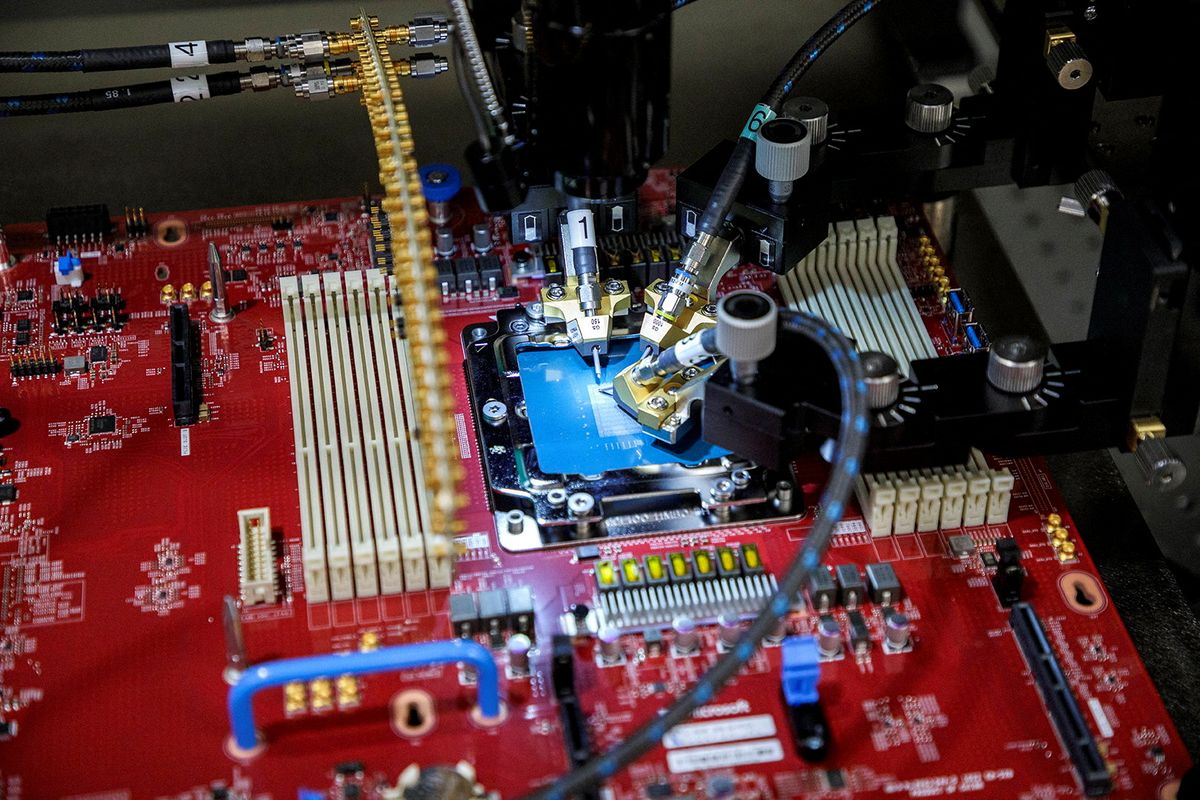

The development: At its Ignite developer conference, Microsoft just revealed its first self-made AI chip and cloud-computing processor. The company said it isn’t planning to sell the chips; instead, it will use them to power its own software and as part of its cloud computing service. It also announced new software that lets clients build their own AI assistants.

The AI chip, called Maia 100, is designed to speed up AI computing tasks and to serve as a foundation for Microsoft’s “Copilot” service for business software clients, as well as for developers who build custom AI services. Maia is meant to run large language models (LLMs), the technology underpinning Microsoft’s Azure OpenAI service. Microsoft’s main AI partner, OpenAI, the company behind ChatGPT, is also testing Maia. The cloud-computing server chip, called Cobalt 100, is being tested alongside Maia and is expected to go into Microsoft data centers sometime next year. With these moves, the company is trying to become less dependent on its partners and outside suppliers.

Key comments:

“This is not something that's displacing Nvidia,” said Ben Bajarin, CEO of analyst firm Creative Strategies. “Microsoft has a very different kind of core opportunity here because they're making a lot of money per user for the services.”

“Microsoft is building the infrastructure to support AI innovation, and we are reimagining every aspect of our data centers to meet the needs of our customers,” said Scott Guthrie, executive vice president of Microsoft’s cloud and AI group, in a statement given to TechCrunch. “At the scale we operate, it’s important for us to optimize and integrate every layer of the infrastructure stack to maximize performance, diversify our supply chain and give customers infrastructure choice.”