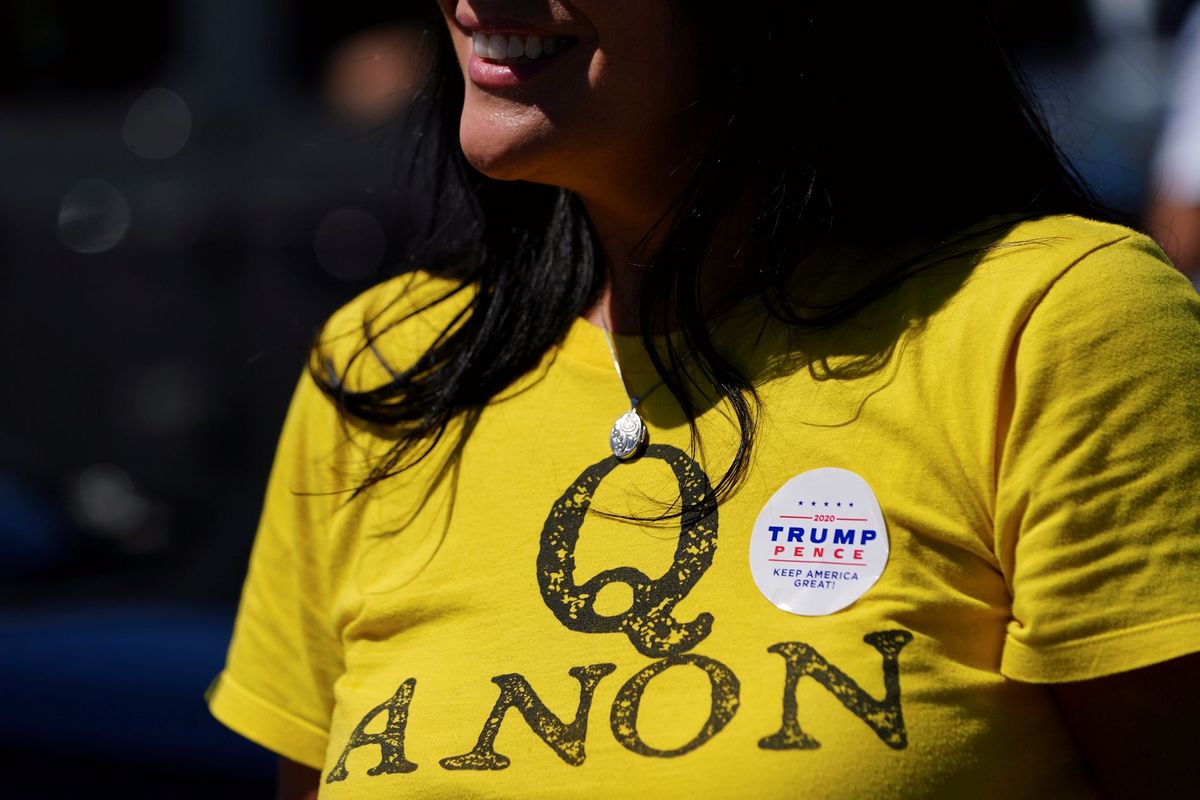

Social media platforms are determined to slow the spread of conspiracy theories like QAnon. Can they?

Social media platforms like YouTube and Twitter exist to give their users the freedom to express themselves however they see fit. That freedom is a crucial part of how these sites grew into the global sensations they are today, which helps explain why so many of these platforms have been slow to address one of their biggest weaknesses: the easy dissemination of harmful hate speech and conspiracy theories.

YouTube, the popular video-sharing platform, has recently announced it wants to limit QAnon and other conspiracy theory content on its site, joining Facebook, Twitter and TikTok in the battle to prevent the spread of misinformation and hateful content. It may be a well-intentioned effort, but white supremacist content and QAnon conspiracies aren’t going to disappear without a fight.

YouTube takes on conspiracy theories

In September, writing for Wired, technology author Clive Thompson covered YouTube’s efforts to staunch the flow of conspiracy content on its platform. Noting that “the video giant is awash in misinformation,” Thompson reported that YouTube was tackling the issue by addressing the main broadcaster of such conspiracy theories: its own recommendation algorithm.

According to Thompson, work began in early 2019 on “a grand YouTube project to teach its recommendation AI [Artificial Intelligence] how to recognize the conspiratorial mindset and demote it.”

From QAnon to 5G-related conspiracies, anti-vax propaganda to 9/11 truthers, YouTube is known as the go-to platform for conspiracy theorists. With its ethos of supporting user-created content, it has also been blamed for helping to spread far-right and racist views, which frequently find common ground with conspiracy theories.

While some conspiracy theories, like the belief that the Earth is flat, may seem innocuous (if silly), Thompson notes that the current far-right president of Brazil, Jair Bolsonaro, began raising his political profile in 2016 by posting anti-gay conspiracy videos on YouTube.

The issue is that conspiracy theories rarely stay contained. As Barna William Donovan, a professor of communication and media culture at Saint Peter’s University, told TMS in October, “Research shows that people who are staunch, committed believers in at least one conspiracy theory will also be very open-minded to numerous other such theories.”

So, while a belief in Bigfoot might seem harmless, it often represents the proverbial slippery slope.

Recognizing that its platform had played a significant role in the pervasive dissemination of conspiracy theories, YouTube put its engineers to work developing an AI system that could halt the spread. Within a year of deploying the new system, YouTube claimed it had cut viewership of conspiracy theory content by 70%.

Social media bans QAnon

Despite apparently successful efforts to quell the spread of conspiracy theories, YouTube, like many other platforms, has struggled to stop the rise of QAnon. The pro-Trump conspiracy theory, which alleges that Democrats and liberal celebrities are members of a Satanic cabal that sexually abuses children, is uniquely suited to the social media age.

Like a virus, QAnon has propagated rapidly in the last few years, expanding from an anonymous post on 4chan into a global (albeit still niche) movement. One of the ways the movement has grown is by attaching itself to genuine anti-sex trafficking movements through the hijacking of hashtags like #SaveTheChildren.

On October 15, YouTube announced it was going to take a more active role in banning QAnon content. Whereas the AI system merely adjusted the site’s recommendations so conspiracy theories didn’t spread as easily, this new move was a far more active and targeted approach.

YouTube’s new policy would bar any conspiracy theory content that leads to threats or harassment directed at individuals. QAnon, which frequently labels public figures as pedophiles without evidence, falls into this category. Many Q-related posts advocate the arrest and violent punishment, even execution, of the movement’s perceived enemies.

YouTube’s ban follows similar bans by Facebook and Twitter, where the movement has generated considerable attention. In July, Twitter reported it had already removed thousands of QAnon-related accounts. However, the bans haven’t been completely effective, with posters figuring out ways to fly under the radar or simply creating new accounts.

Additionally, the figure known as Q, the unknown person (or people) behind the conspiracy theory, has encouraged QAnon adherents to hide their ties to the movement. Social media influencer accounts share Q theories, often unknowingly, under the banner of raising awareness about child sexual abuse and trafficking.

TikTok takes on hate speech

Another video-sharing platform, TikTok, has recently announced that it is seeking to oust conspiracy theories and hate speech from its platform. TikTok has been growing steadily in popularity over the last few years, especially among younger Gen Z users, with its short, user-created video clips of no more than 60 seconds.

On October 21, the platform published a blog post titled “Countering hate on TikTok.” The post says the company is “[t]aking a stand against hateful ideologies,” including neo-Nazism and white supremacy, as well as “content that is hurtful to the LGBTQ+ community.”

The blog post does not specifically mention QAnon or conspiracy theories. However, The New York Times reported that the platform has also made clear it intends to restrict QAnon supporters from sharing Q content.

For TikTok, as for other social media giants, balancing users’ freedom to create content against the desire to stop the spread of hate and misinformation will likely prove a tricky path to navigate.

Have a tip or story? Get in touch with our reporters at tips@themilsource.com
